Another Perspective on ChatGPT’s Social Engineering Potential

We’ve had occasion to write about ChatGPT’s potential for malign use in social engineering, both in the generation of phishbait at scale and as a topical theme that can appear in lures. We continue to track concerns about the new technology as they surface in the literature.

At the end of last week, the Harvard Business Review offered a summary of two specific threats ChatGPT could pose: “AI-generated phishing scams” and “duping ChatGPT into writing malicious code.”

On the first threat, the Harvard Business Review writes, “While more primitive versions of language-based AI have been open sourced (or available to the general public) for years, ChatGPT is far and away the most advanced iteration to date. In particular, ChatGPT’s ability to converse so seamlessly with users without spelling, grammatical, and verb tense mistakes makes it seem like there could very well be a real person on the other side of the chat window. From a hacker’s perspective, ChatGPT is a game changer.” Many users treat nonstandard language and usage errors as signs of a phishing email. Insofar as ChatGPT can smooth over those linguistic rough spots, it makes phishing more plausible and therefore more dangerous.

The second threat is that the AI could be induced to write malicious code itself. Safeguards are in place to inhibit this: “ChatGPT is proficient at generating code and other computer programming tools, but the AI is programmed not to generate code that it deems to be malicious or intended for hacking purposes. If hacking code is requested, ChatGPT will inform the user that its purpose is to ‘assist with useful and ethical tasks while adhering to ethical guidelines and policies.’”

Such measures are inhibitions rather than impossibilities, as the essay acknowledges: “However, manipulation of ChatGPT is certainly possible and with enough creative poking and prodding, bad actors may be able to trick the AI into generating hacking code. In fact, hackers are already scheming to this end.”

The essay concludes by arguing for regulation and codes of ethics that would constrain AI developers so that the tools they build are harder to abuse in these ways. Ethical practices are welcome, but there will always be bad actors, from criminal gangs to nation-state intelligence services, who won’t feel bound by those constraints. In practice, new-school security awareness training remains an effective line of defense against social engineering of all kinds. After all, it works against phishing attempts written by fluent native speakers, and there’s no reason to think AI will outdo all of its human creators in initial plausibility.

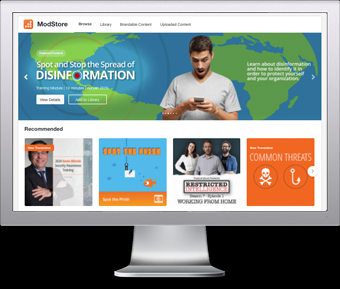

The world’s largest library of security awareness training content is now just a click away!

In your fight against phishing and social engineering you can now deploy the best-in-class simulated phishing platform combined with the world’s largest library of security awareness training content; including 1000+ interactive modules, videos, games, posters and newsletters.

You can now get access to our new ModStore Preview Portal to see our full library of security awareness content; you can browse, search by title, category, language or content topics.

The ModStore Preview includes:

- Interactive training modules

- Videos

- Trivia Games

- Posters and Artwork

- Newsletters and more!

PS: Don’t like to click on redirected buttons? Cut & Paste this link in your browser: https://info.knowbe4.com/one-on-one-demo-partners?partnerid=001a000001lWEoJAAW