AI in 2024: The Top 10 Cutting-Edge Social Engineering Threats

The year 2024 is shaping up to be a pivotal moment in the evolution of artificial intelligence (AI), particularly in the realm of social engineering. As AI capabilities grow exponentially, so too do the opportunities for bad actors to harness these advancements for more sophisticated and potentially damaging social engineering attacks. Let’s explore the top 10 expected AI developments of 2024 and their implications for cybersecurity.

1. Exponential Growth in AI Reasoning and Capabilities

As Vinod Khosla of Khosla Ventures points out, “The level of innovation in AI is hard for many to imagine.” AI’s reasoning abilities are expected to soar, potentially outperforming human intelligence in certain areas. This could lead to more convincing and adaptable AI-driven social engineering tactics, as bad actors leverage these reasoning capabilities to craft more persuasive attacks.

2. Multimodal Large Language Models (MLLMs)

MLLMs, which can process and understand multiple data types such as text, images, and audio, will take center stage in 2024. Bad actors could use these models to create highly convincing, contextually relevant phishing messages or fake social media profiles, making social engineering attacks more effective.

3. Text to Video (T2V) Technology

Advances in T2V technology mean that AI-generated videos could become a new frontier for misinformation and deepfakes. This could have significant implications for fake news and propaganda, especially around the 2024 elections, and could also enable real-time Business Email Compromise (BEC) attacks.

4. Revolution in AI-Driven Learning

AI’s ability to identify knowledge gaps and enhance learning can be exploited to manipulate or mislead through tailored disinformation campaigns, targeting individuals based on their learning patterns or perceived weaknesses.

5. Challenges in AI Regulation

Governments’ attempts to regulate AI and prevent catastrophic risks will be a key area of focus. However, the speed of AI innovation will outpace regulatory efforts, leading to a period in which advanced AI technologies will inevitably be used in social engineering attacks.

6. The AI Investment Bubble and Startup Failures

The surge in AI venture capital points to a booming market, but if AI startups fail because their business models become obsolete, they could leave behind orphaned, advanced, but unsecured AI tools that are available for malicious use.

7. AI-Generated Disinformation in Elections

With 40 major elections scheduled around the globe in 2024, the threat of AI-generated disinformation campaigns is more significant than ever. Sophisticated AI tools are already being used to sway public opinion and stir political unrest.

8. AI Technology Available to Script Kiddies

As AI becomes more accessible and cost-effective, the likelihood of advanced AI tools falling into the wrong hands increases. This could lead to a rise in AI-powered social engineering attacks by less technically skilled bad actors.

9. Enhanced AI Hardware Capabilities

Jensen Huang, co-founder and chief executive of Nvidia, told the DealBook Summit in late November that “there’s a whole bunch of things that we can’t do yet.” However, advances in AI hardware, such as Neural Processing Units (NPUs), will lead to faster and more sophisticated AI models. This could enable real-time, adaptive social engineering tactics, making scams more convincing and harder to detect.

10. AI in Cybersecurity and the Arms Race

While AI advancements provide tools for cybercriminals, they also empower more effective AI-driven security systems. This is fueling an escalating arms race between cybersecurity defenses and attackers’ tactics, in which real-time AI monitoring against AI-driven social engineering attacks might become a reality.

The upshot is that 2024 is set to be a landmark year in AI development, with far-reaching implications for social engineering. It is crucial for cybersecurity professionals to stay ahead of these trends, build a strong security culture, adapt their strategies, and keep stepping their workforce through frequent security awareness and compliance training.

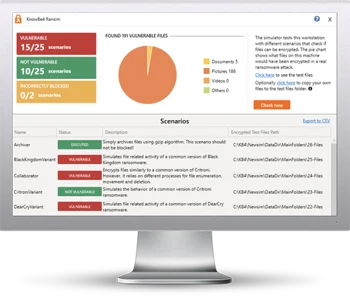

Free Ransomware Simulator Tool

Threat actors are constantly coming out with new strains to evade detection. Is your network effective in blocking all of them when employees fall for social engineering attacks?

KnowBe4’s “RanSim” gives you a quick look at the effectiveness of your existing network protection. RanSim will simulate 24 ransomware infection scenarios and 1 cryptomining infection scenario and show you if a workstation is vulnerable.

Here’s how it works:

- 100% harmless simulation of real ransomware and cryptomining infections

- Does not use any of your own files

- Tests 25 types of infection scenarios

- Just download the installer and run it

- Results in a few minutes!

PS: Prefer not to click on redirected buttons? Copy and paste this link into your browser: https://info.knowbe4.com/ransomware-simulator-tool-partner?partnerid=001a000001lWEoJAAW