Fake ChatGPT Scam Turns into a Fraudulent Money-Making Scheme

Using ChatGPT’s AI as a lure and promising new ways to make money, scammers trick victims with a phishing-turned-vishing attack that ends with the victim’s money being taken.

It’s probably safe to guess that anyone reading this article has either played with ChatGPT directly or has seen examples of its use on social media. The idea of being able to ask simple questions and get expert-sounding answers in just about any area of knowledge is staggering. And OpenAI’s latest model, GPT-4, already looks set to dwarf the impressive reputation the company established with the initial version.

But cybercriminals are also looking for ways to jump on the AI bandwagon as a means of separating victims from their money. One such scam comes to us by way of security researchers at Bitdefender who have identified a phishing attack that uses ChatGPT theming.

The attack starts with topical subject lines such as “ChatGPT: New AI bot has everyone going crazy about it.” Once the victim clicks the link, they are taken to a poor imitation of ChatGPT that behaves more like the bot-based support chat tools we’ve all seen. The page immediately frames the conversation around making money, with the headline “Earn up to $10,000 per month on the unique ChatGPT platform.”

Using a series of prompt-based interactions (rather than ChatGPT’s freeform dialogue method), the fake bot quickly steers the visitor towards making money and, here’s the kicker, moves the conversation to a phone call.

Once on the phone, victims are pitched investments in stocks, crypto and oil, and are asked for a minimum investment of €250. Of course, once the “investment” is made, the money is never seen again.

This attack exploits interest in making money through breakthrough technology. And because the initial email simply talks about ChatGPT, with no mention of making money, nearly anyone who is curious about the technology may see it as an opportunity to find out more.

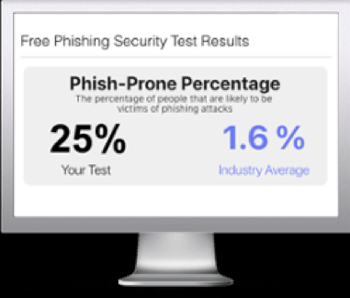
