FBI Warns of Cybercriminals Using Generative AI to Launch Phishing Attacks

The US Federal Bureau of Investigation (FBI) warns that threat actors are increasingly using generative AI to make social engineering attacks more persuasive.

Criminals are using these tools to generate convincing text, images, and voice audio to impersonate individuals and companies.

“Generative AI reduces the time and effort criminals must expend to deceive their targets,” the FBI says. “Generative AI takes what it has learned from examples input by a user and synthesizes something entirely new based on that information.

“These tools assist with content creation and can correct for human errors that might otherwise serve as warning signs of fraud. The creation or distribution of synthetic content is not inherently illegal; however, synthetic content can be used to facilitate crimes, such as fraud and extortion.”

The Bureau offers the following advice to help users avoid falling for these attacks:

- Create a secret word or phrase with your family to verify their identity

- Look for subtle imperfections in images and videos, such as distorted hands or feet, unrealistic teeth or eyes, indistinct or irregular faces, unrealistic accessories such as glasses or jewelry, inaccurate shadows, watermarks, lag time, voice matching, and unrealistic movements

- Listen closely to the tone and word choice to distinguish between a legitimate phone call from a loved one and an AI-generated voice clone

- If possible, limit online content of your image or voice, make social media accounts private, and limit followers to people you know, to reduce fraudsters’ ability to use generative AI software to create fraudulent identities for social engineering

- Verify the identity of the person calling you by hanging up the phone, researching the contact information of the bank or organization purporting to call you, and calling the phone number directly

- Never share sensitive information with people you have met only online or over the phone

- Do not send money, gift cards, cryptocurrency, or other assets to people you do not know or have met only online or over the phone

KnowBe4 empowers your workforce to make smarter security decisions every day. Over 70,000 organizations worldwide trust the KnowBe4 platform to strengthen their security culture and reduce human risk.

The FBI has the story.

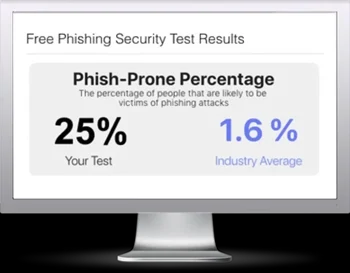

Free Phishing Security Test

Would your users fall for convincing phishing attacks? Take the first step now and find out before bad actors do. Plus, see how you stack up against your peers with phishing Industry Benchmarks. The Phish-prone percentage is usually higher than you expect and is great ammo to get budget.

Here’s how it works:

- Immediately start your test for up to 100 users (no need to talk to anyone)

- Select from 20+ languages and customize the phishing test template based on your environment

- Choose the landing page your users see after they click

- Show users which red flags they missed, or a 404 page

- Get a PDF emailed to you in 24 hours with your Phish-prone % and charts to share with management

- See how your organization compares to others in your industry

PS: Don’t like to click on redirected buttons? Cut & Paste this link in your browser: https://info.knowbe4.com/phishing-security-test-partner?partnerid=001a000001lWEoJAAW